
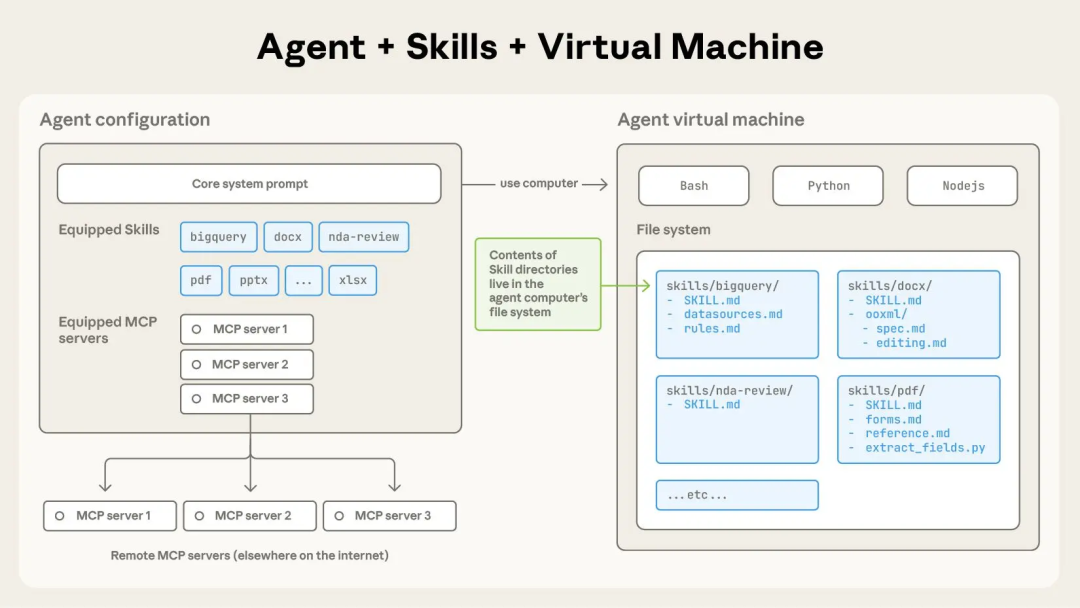

Claude can now use Skills to get better at specific tasks. Skills are folders containing instructions, scripts, and resources that Claude can load when needed.


Claude will only invoke a Skill when it’s relevant to the task at hand. When it does, the Skill makes Claude better at specialized tasks, such as working with Excel or following an organization’s internal brand guidelines.
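One way to picture how many Skills can stay available without all of them filling the context: only each Skill’s short name and description are listed up front, and the full instructions are read from disk once a Skill looks relevant. The sketch below assumes that design; the `Skill` class, the `skills_summary` and `load_instructions` helpers, and the example entries are hypothetical, and in practice it is the model itself, not code, that judges relevance.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class Skill:
    name: str          # short identifier, e.g. "slack-gif-creator"
    description: str   # one-line summary of when the Skill applies
    path: Path         # location of the Skill's full instructions (SKILL.md)


def skills_summary(skills: list[Skill]) -> str:
    """Build the compact listing that would sit in the model's context.

    Only names and descriptions are included, so the cost grows with the
    number of Skills rather than with the size of their instructions.
    """
    return "\n".join(f"- {s.name}: {s.description}" for s in skills)


def load_instructions(skill: Skill) -> str:
    """Read a Skill's full instructions only after it has been chosen."""
    return skill.path.read_text(encoding="utf-8")


if __name__ == "__main__":
    available = [
        Skill("slack-gif-creator", "Create animated GIFs sized for Slack",
              Path("slack-gif-creator/SKILL.md")),
        Skill("pdf", "Create and edit PDF files", Path("pdf/SKILL.md")),
    ]
    print(skills_summary(available))
```

The design choice here is that the always-loaded part stays tiny; the expensive part (`load_instructions`) only runs for the Skill actually in use.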

Try it out

This toolkit creates animated GIFs optimized for Slack, and includes a validator for Slack’s size constraints plus composable animation components. The skill applies when a user asks for an animated GIF or emoji for Slack with a description like “Make me a Slack GIF of someone doing something.”

I asked Claude to generate a GIF for me to send to Slack showing how much cooler Skills are than MCP. Here is part of the code it wrote:

```python
# First, add the skill's directory to the Python path
import sys
sys.path.insert(0, '/mnt/Skills/examples/slack-gif-creator')

from PIL import Image, ImageDraw, ImageFont
# This class lives in the skill's core/ directory
from core.gif_builder import GIFBuilder

# ... code that builds the GIF ...

# Save the result to disk:
info = builder.save('/mnt/user-data/outputs/Skills_vs_mcps.gif',
                    num_colors=128,
                    optimize_for_emoji=False)

print("GIF created successfully!")
print(f"Size: {info['size_kb']:.1f} KB ({info['size_mb']:.2f} MB)")
print(f"Frames: {info['frame_count']}")
print(f"Duration: {info['duration_seconds']:.1f}s")

# Use check_slack_size() to make sure the file is small enough to send on Slack:
passes, check_info = check_slack_size('/mnt/user-data/outputs/Skills_vs_mcps.gif', is_emoji=False)
if passes:
    print("✓ Ready for Slack!")
else:
    print(f"⚠ File size: {check_info['size_kb']:.1f} KB (limit: {check_info['limit_kb']} KB)")
```
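The excerpt doesn’t show how check_slack_size() works. Judging only from how it is called, it takes a file path and an is_emoji flag and returns a pass/fail flag plus a dict with size_kb and limit_kb. Here is a hypothetical stand-in with that same shape, named `check_slack_size_sketch` to make clear it is not the skill’s code; the limit values are placeholders chosen for illustration, not the skill’s real thresholds or Slack’s official limits.

```python
import os

# Placeholder limits for illustration only; NOT the skill's real thresholds.
HYPOTHETICAL_LIMITS_KB = {"emoji": 64, "gif": 2048}


def check_slack_size_sketch(path: str, is_emoji: bool = False) -> tuple[bool, dict]:
    """Hypothetical stand-in for check_slack_size(): compare a file's size to a limit."""
    size_kb = os.path.getsize(path) / 1024
    limit_kb = HYPOTHETICAL_LIMITS_KB["emoji" if is_emoji else "gif"]
    info = {"size_kb": size_kb, "limit_kb": limit_kb}
    return size_kb <= limit_kb, info


if __name__ == "__main__":
    import tempfile

    # Write a 100 KB dummy file and check it against the emoji placeholder limit.
    with tempfile.NamedTemporaryFile(suffix=".gif", delete=False) as f:
        f.write(b"\0" * 100 * 1024)
    passes, info = check_slack_size_sketch(f.name, is_emoji=True)
    print(passes, f"{info['size_kb']:.1f} KB (limit: {info['limit_kb']} KB)")
    os.remove(f.name)
```

Returning the measured size alongside the verdict is what lets the calling code print a helpful message when a GIF is too large.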

Skills depend on the coding environment

Skills depend on the model having access to a filesystem, tools to navigate it, and the ability to execute commands in that environment. This is a common pattern in today’s LLM tools. ChatGPT Code Interpreter, released in early 2023, was the first big example, and the pattern was later extended to local machines by coding agent tools such as Cursor, Claude Code, Codex CLI, and Gemini CLI.

This is the biggest difference between Skills and earlier attempts to extend LLMs, such as MCP and ChatGPT plugins. It is a significant dependency, but the amount of new capability it unlocks is almost bewildering.

Because Skills are so powerful and so easy to create, it is all the more important to give LLMs safe coding environments. Stressing safety in prompts helps, but the real goal is to sandbox the runtime environment so that attacks such as prompt injection can only do an acceptable amount of damage.
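Real sandboxing needs containers, VMs, or OS-level isolation, but one small ingredient can be sketched with the Python standard library: run a Skill’s script in a separate process with a wall-clock timeout, CPU and memory limits, and a stripped-down environment. This is a Linux-oriented sketch (it relies on the Unix-only `resource` module); the `run_limited` helper and its default limits are assumptions of mine, and it does nothing to restrict file or network access, so on its own it would not stop a prompt-injection attack.

```python
import resource
import subprocess
import sys


def _lower_limit(which: int, value: int) -> None:
    """Set a resource limit, never trying to raise it above the existing hard cap."""
    _soft, hard = resource.getrlimit(which)
    if hard != resource.RLIM_INFINITY:
        value = min(value, hard)
    resource.setrlimit(which, (value, value))


def run_limited(cmd: list[str], timeout: float = 10.0, cpu_seconds: int = 5,
                memory_bytes: int = 512 * 1024 * 1024) -> subprocess.CompletedProcess:
    """Run cmd with a timeout, CPU and address-space limits, and a minimal environment.

    Raises subprocess.TimeoutExpired if the command runs longer than `timeout` seconds.
    """
    def apply_limits() -> None:
        # Executed in the child process after fork(), before the command starts.
        _lower_limit(resource.RLIMIT_CPU, cpu_seconds)
        _lower_limit(resource.RLIMIT_AS, memory_bytes)

    return subprocess.run(
        cmd,
        capture_output=True,
        text=True,
        timeout=timeout,
        env={"PATH": "/usr/bin:/bin"},  # don't pass API keys or other secrets through
        preexec_fn=apply_limits,
    )


if __name__ == "__main__":
    result = run_limited([sys.executable, "-c", "print('hello from the child')"])
    print(result.returncode, result.stdout.strip())
```

Passing an explicit `env` is the cheapest of these measures and arguably the most important: a script tricked by injected instructions can’t exfiltrate credentials it never received.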

Claude Code acts as a general agent

But it turns out I was completely wrong about agents: 2025 is undoubtedly the year of the “agent”.

Frankly, Claude Code isn’t a good name. It’s more than a coding tool: it’s a general-purpose computer automation tool. Anything you could previously accomplish by typing commands into a computer can now be automated by Claude Code. It’s best described as a general agent, and Skills make that even clearer.
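The idea of a general agent that can automate anything you could type into a terminal boils down to a loop: the model proposes a command, the harness runs it, and the output goes back to the model until it decides it is done. The sketch below assumes that shape; `scripted_model` is a hypothetical stand-in for a real LLM call, and a real harness would run the commands inside a sandbox rather than directly.

```python
import shlex
import subprocess
import sys
from typing import Callable, Optional

# A "model" maps the transcript so far to the next shell command, or None when done.
Model = Callable[[list[str]], Optional[str]]


def agent_loop(model: Model, max_steps: int = 5) -> list[str]:
    """Alternate between asking the model for a command and running it."""
    transcript: list[str] = []
    for _ in range(max_steps):
        command = model(transcript)
        if command is None:  # the model decided the task is finished
            break
        result = subprocess.run(shlex.split(command), capture_output=True, text=True, timeout=30)
        transcript.append(f"$ {command}\n{result.stdout}{result.stderr}")
    return transcript


def scripted_model(transcript: list[str]) -> Optional[str]:
    """Hypothetical stand-in for an LLM: issues two fixed commands, then stops."""
    python = shlex.quote(sys.executable)
    plan = [f"{python} --version", f'{python} -c "print(2 + 2)"']
    return plan[len(transcript)] if len(transcript) < len(plan) else None


if __name__ == "__main__":
    for entry in agent_loop(scripted_model):
        print(entry)
```

The `max_steps` cap matters: a real model may never return "done", so the harness, not the model, has the final say on when the loop stops.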

The potential of Skills is enormous. For example, imagine a folder of Skills that lets an agent handle the following tasks:

- Obtain census data and understand its structure.
- Use the appropriate Python libraries to load data in various formats into SQLite or DuckDB.
- Publish the data online as Parquet files in an S3 bucket, or push it to Datasette Cloud as a table.
- Use a skill that captures an experienced data reporter’s know-how to quickly uncover interesting stories in a new dataset.
- Use another skill to create clean, readable data visualizations with D3.

Congratulations! You’ve just built a data journalism agent that can discover and help publish stories based on the latest census data. And you did it all with just a folder full of Markdown files and example Python scripts.
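To make the “example Python scripts” part concrete, here is the kind of script the SQLite-loading Skill might ship alongside its Markdown. It is a hypothetical sketch using only the standard library (`csv` and `sqlite3`); the `load_csv` name is mine, the sample rows are made up, and every column is stored as TEXT so that type detection is left to later steps.

```python
import csv
import io
import sqlite3
from typing import Iterable


def _quote(name: str) -> str:
    """Quote a string as an SQLite identifier, escaping embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def load_csv(conn: sqlite3.Connection, table: str, lines: Iterable[str]) -> int:
    """Create `table` from the CSV header row, insert the remaining rows as TEXT, return the row count."""
    reader = csv.reader(lines)
    header = next(reader)
    columns = ", ".join(f"{_quote(col)} TEXT" for col in header)
    conn.execute(f"CREATE TABLE IF NOT EXISTS {_quote(table)} ({columns})")
    placeholders = ", ".join("?" for _ in header)
    cursor = conn.executemany(f"INSERT INTO {_quote(table)} VALUES ({placeholders})", reader)
    conn.commit()
    return cursor.rowcount


if __name__ == "__main__":
    # Made-up sample rows, not real census figures.
    sample = io.StringIO("county,population\nExample County,1000\nSample County,2500\n")
    conn = sqlite3.connect(":memory:")
    print(load_csv(conn, "counties", sample))  # 2
    total = conn.execute("SELECT SUM(CAST(population AS INTEGER)) FROM counties").fetchone()[0]
    print(total)  # 3500
```

Quoting column names matters for real-world data, where CSV headers often contain spaces or punctuation.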

Skills vs. MCP

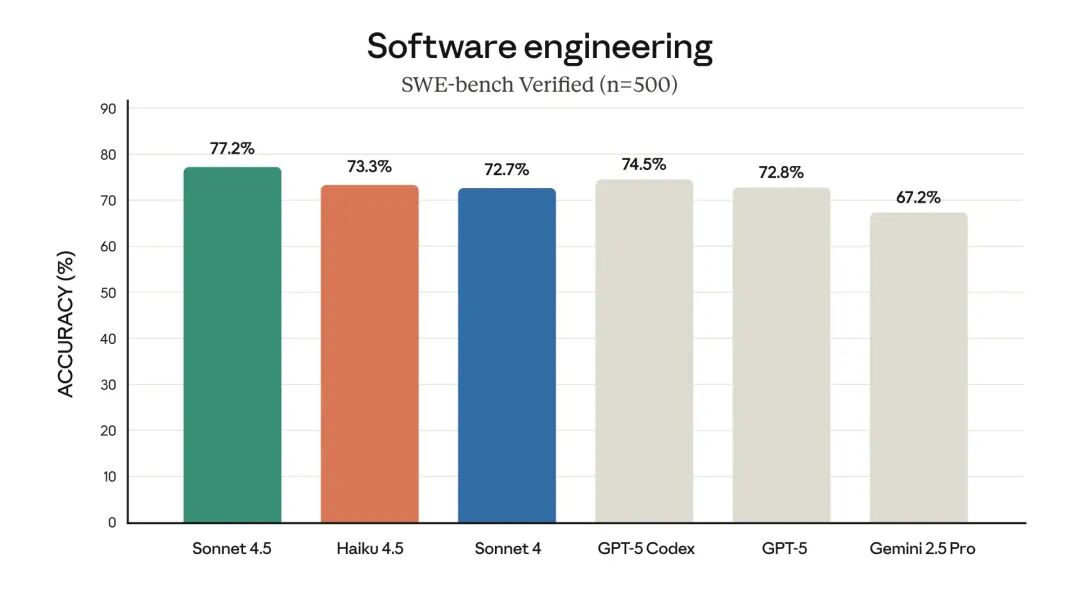

Over time, though, the limitations of MCP began to emerge. The most notable is token consumption: GitHub’s official MCP server alone consumes a huge number of context tokens, and the more context these tool definitions take up, the less room the LLM has left for the actual task.

Since I started using coding agents in earnest, my interest in MCP has waned. I’ve found that almost everything I once needed MCP for can now be done with CLI tools. LLMs know how to call cli-tool --help, so there’s no need to spend tokens describing a tool up front: the model looks up its usage when it needs to.
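The key property of the --help approach is that usage documentation is fetched lazily, only when a tool is actually needed, rather than being loaded into the context ahead of time. A minimal sketch of that on-demand lookup, assuming a harness written in Python; `tool_help` is a hypothetical helper, and the Python interpreter stands in for an arbitrary CLI tool.

```python
import functools
import subprocess
import sys


@functools.lru_cache(maxsize=None)
def tool_help(executable: str) -> str:
    """Run `<executable> --help` the first time a tool's usage is needed, then reuse the text."""
    result = subprocess.run([executable, "--help"], capture_output=True, text=True, timeout=10)
    # Some tools print usage to stderr rather than stdout, so keep whichever is non-empty.
    return result.stdout or result.stderr


if __name__ == "__main__":
    # Nothing is fetched until a tool is actually used.
    print(tool_help(sys.executable).splitlines()[0])
```

Caching means the help text costs one process launch per tool, and tools that are never used cost nothing at all.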

Skills have the same advantage, and I don’t even need to implement a new CLI tool. I can write a Markdown file describing how to complete a task and add scripts only where they help with reliability or efficiency.

Skills are coming

One of the most exciting things about Skills is how easily they can be shared. I anticipate a lot of Skills will be implemented as single files, with more complex Skills taking the form of folders containing more files.

I’m already thinking about Skills I could build, such as one on how to develop a Datasette plugin.

Another advantage of the Skills design is that it works with other models too.

You can take a Skills folder, point Codex CLI or Gemini CLI at it, and ask it to “read the file pdf/SKILL.md and create a PDF file describing this project for me.” This works even though those tools and models have no built-in knowledge of the Skills system.
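Because a Skill is just files, handing it to another agent mostly means telling that agent where to look. Here is a hypothetical helper that finds every SKILL.md under a Skills folder and builds the kind of instruction quoted above. It assumes one subdirectory per Skill, each containing a SKILL.md, matching the pdf/SKILL.md example; the function names are my own.

```python
from pathlib import Path


def find_skill_files(skills_dir: Path) -> list[Path]:
    """Return SKILL.md paths relative to skills_dir, one per Skill subfolder, sorted."""
    return sorted(p.relative_to(skills_dir) for p in skills_dir.glob("*/SKILL.md"))


def pointer_prompt(skill_file: Path, task: str) -> str:
    """Build an instruction any coding agent can follow, even without built-in Skills support."""
    return f"Read the file {skill_file.as_posix()} and then {task}"


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "pdf").mkdir()
        (root / "pdf" / "SKILL.md").write_text("---\nname: pdf\n---\n", encoding="utf-8")
        for skill_file in find_skill_files(root):
            print(pointer_prompt(skill_file, "create a PDF describing this project."))
```

Nothing here is model-specific: the output is plain text, which is why the same folder can serve Claude, Codex CLI, or Gemini CLI.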

I believe there will be a Cambrian explosion in the Skills ecosystem, and even this year’s MCP craze will seem tame in comparison.

Simplicity is the key

Some people have pushed back against Skills, arguing that they are so simple they hardly count as a feature.

Plenty of people have already experimented with putting extra instructions in a Markdown file and telling the coding agent to read that file before starting a task. AGENTS.md is a well-established pattern, and such a file can already contain instructions like “read PDF.md before trying to create a PDF.”

But the natural simplicity of Skills is exactly what excites me so much.

MCP is a full protocol specification covering hosts, clients, servers, resources, prompts, tools, sampling, roots, elicitation, and three different transports (stdio, Streamable HTTP, and the original SSE transport).

Skills, by contrast, are just Markdown with a little YAML metadata, plus optional scripts in whatever form the environment can execute. They feel much closer to the spirit of LLMs: provide some text and let the model figure things out.
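To show how little machinery that format needs, here is a sketch that splits a SKILL.md file into its YAML metadata and Markdown body. It assumes the metadata sits between --- lines at the top of the file, with fields such as name and description; to stay dependency-free, the hypothetical `parse_skill` function only handles flat key: value pairs, whereas a real loader would use a proper YAML parser.

```python
def parse_skill(text: str) -> tuple[dict[str, str], str]:
    """Split SKILL.md text into (metadata, body). Only flat `key: value` YAML is supported."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, text  # no front matter: the whole file is instructions
    try:
        end = next(i for i, line in enumerate(lines[1:], start=1) if line.strip() == "---")
    except StopIteration:
        raise ValueError("front matter opened with '---' but never closed") from None
    metadata = {}
    for line in lines[1:end]:
        if ":" in line:
            key, value = line.split(":", 1)  # split once so values may contain colons
            metadata[key.strip()] = value.strip().strip('"')
    body = "\n".join(lines[end + 1:]).lstrip("\n")
    return metadata, body


if __name__ == "__main__":
    example = (
        "---\n"
        "name: slack-gif-creator\n"
        "description: Toolkit for creating animated GIFs optimized for Slack\n"
        "---\n"
        "# Slack GIF creator\n\nInstructions go here.\n"
    )
    meta, body = parse_skill(example)
    print(meta["name"], "|", meta["description"])
    print(body)
```

Only the metadata needs structure; the body is free-form Markdown that is handed to the model as-is.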

Skills offload all the complexity to the LLM harness and its computing environment. Given how LLM tool use has evolved over the past few years, this may be the most sensible and practical direction to explore.