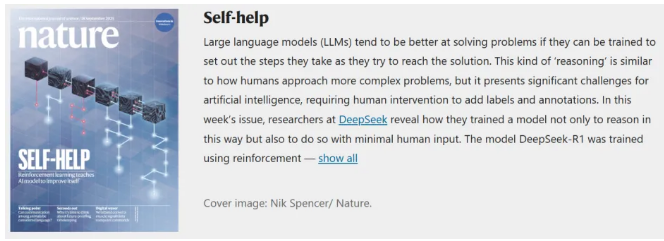

DeepSeek R1 Becomes First Peer-Reviewed Large Language Model Featured in Nature

On September 18, research on large language models (LLMs) reached a milestone: the DeepSeek team’s paper on DeepSeek R1 appeared on the cover of the top-tier academic journal Nature, making R1 the first large language model to pass authoritative peer review. The publication not only showcases the technical innovation behind DeepSeek R1 but also sets a new academic benchmark for the AI industry.

The Nature editorial team noted that, at a time of rapid AI development and rampant hype, DeepSeek’s approach offers the industry an effective strategy: rigorous, independent peer review improves the transparency and reproducibility of AI research and reduces the societal risks posed by unverified technical claims. The editors encouraged more AI companies to follow DeepSeek’s example to promote healthy development of the field.

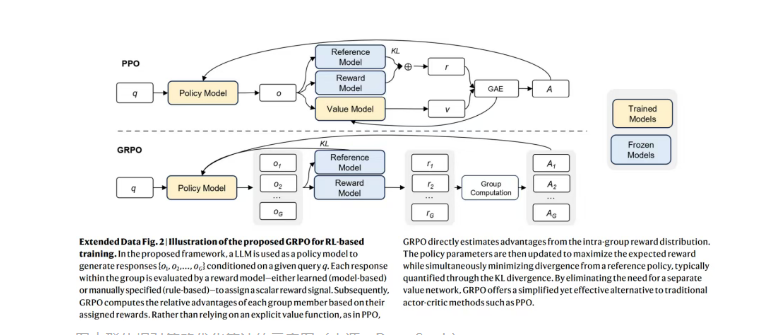

The paper details the method used to train DeepSeek R1’s reasoning capability. Unlike conventional fine-tuning, which relies on human-labeled examples, the model is trained with reinforcement learning (RL) in an autonomous environment, without any human-provided examples, which allows it to develop complex reasoning skills on its own. The results are striking: on the AIME 2024 math competition benchmark, the model’s accuracy jumped from 15.6% to 71.0%, a level comparable to OpenAI’s models.

A diagram illustrating the Group Relative Policy Optimization (GRPO) algorithm (Source: DeepSeek)
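The algorithm named in the caption above is usually rendered in English as Group Relative Policy Optimization (GRPO). Its central idea is to score each sampled answer relative to the other answers drawn for the same prompt, rather than against a separately trained value model. The snippet below is a minimal sketch of that group-relative scoring step only, not DeepSeek’s implementation: the function name, the `eps` constant, the use of the population standard deviation, and the toy 0/1 rewards are all assumptions made for illustration.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its own group (hypothetical helper).

    rewards: scalar rewards for several answers sampled for ONE prompt.
    Returns one advantage per answer: (reward - group mean) / group std.
    """
    mu = mean(rewards)
    # Assumption: population standard deviation; eps avoids division by zero
    # when every answer in the group receives the same reward.
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Toy group: four sampled answers, rewarded 1.0 if correct and 0.0 otherwise.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)

# Correct answers end up with positive advantages, incorrect ones negative.
for reward, advantage in zip(rewards, advantages):
    print(f"reward={reward:.1f} advantage={advantage:+.3f}")
```

Answers that beat their group’s average are reinforced and those below it are discouraged. In a full training loop these advantages would weight a clipped policy-gradient update, which is omitted here.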

During several months of peer review, eight experts provided feedback, prompting the DeepSeek team to revise and refine the technical details multiple times. The team also acknowledged remaining challenges, such as poor readability and language mixing in the model’s output. To address these, DeepSeek employed a multi-stage training framework that combines rejection sampling with supervised fine-tuning, further improving the model’s writing ability and overall performance.
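To make the rejection sampling step concrete, the sketch below shows the general pattern: sample several candidate responses per prompt, keep only those that pass a quality filter, and use the survivors as supervised fine-tuning data. It is an illustration under stated assumptions rather than DeepSeek’s pipeline. The function names, the toy arithmetic "model", and the exact-match filter are all hypothetical stand-ins for a real LLM and real filters (for example, correctness and readability checks).

```python
import random


def rejection_sample(prompt, generate, is_acceptable, num_candidates=8):
    """Draw several candidates for one prompt and keep those passing the filter."""
    candidates = [generate(prompt) for _ in range(num_candidates)]
    return [c for c in candidates if is_acceptable(prompt, c)]


def build_sft_dataset(prompts, generate, is_acceptable, num_candidates=8):
    """Collect accepted (prompt, response) pairs for supervised fine-tuning."""
    dataset = []
    for prompt in prompts:
        for response in rejection_sample(prompt, generate, is_acceptable, num_candidates):
            dataset.append({"prompt": prompt, "response": response})
    return dataset


# --- Toy stand-ins (assumptions, not a real model or grader) ---
rng = random.Random(0)


def toy_generate(prompt):
    """Pretend model: adds two numbers but is sometimes off by one."""
    a, b = prompt
    return a + b + rng.choice([0, 0, 1])


def toy_is_acceptable(prompt, response):
    """Pretend filter: accept only exactly correct sums."""
    a, b = prompt
    return response == a + b


prompts = [(1, 2), (3, 4)]
sft_data = build_sft_dataset(prompts, toy_generate, toy_is_acceptable)
print(f"kept {len(sft_data)} of {len(prompts) * 8} sampled responses")
```

The filter, not the sampler, determines the quality of the resulting dataset, so any criteria the fine-tuned model should satisfy must be encoded in `is_acceptable`.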

The publication of the DeepSeek R1 paper marks a shift toward more scientific, rigorous, and reproducible research on AI foundation models. It sets a new paradigm for future AI research and is expected to push the industry toward more transparent and open development.