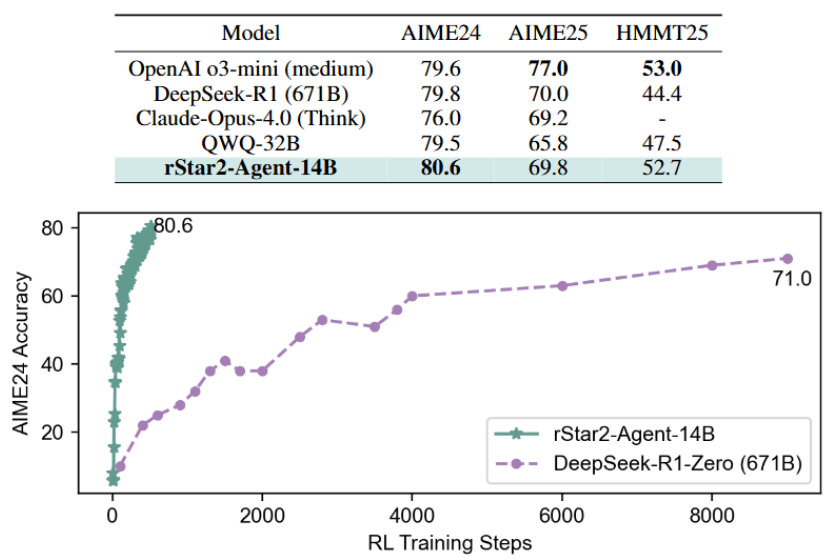

Microsoft has open-sourced rStar2-Agent, a new AI agent reasoning model trained with agentic reinforcement learning. Despite having only 14 billion parameters, it achieved 80.6% accuracy on the AIME24 math reasoning benchmark, edging past the 671-billion-parameter DeepSeek-R1 (79.8%). The result prompts a reevaluation of the relationship between model size and performance.

Impressive Performance Across Multiple Benchmarks

rStar2-Agent's results are not limited to math reasoning. On the GPQA-Diamond science reasoning benchmark, the model achieved an accuracy of 60.9%, outperforming DeepSeek-V3’s 59.1%. On the BFCL v3 agent tool-use benchmark, its task completion rate reached 60.8%, also higher than DeepSeek-V3’s 57.6%. These results suggest that the model generalizes beyond math to scientific reasoning and tool use.

Three Key Innovations

To achieve this result, Microsoft introduced three innovations, one each in training infrastructure, the reinforcement learning algorithm, and the training pipeline.

- Infrastructure: Microsoft built an efficient, isolated code execution service capable of handling a massive number of training requests. It supports up to 45,000 concurrent tool calls per training step with an average latency of just 0.3 seconds.

- Algorithm: Microsoft introduced GRPO-RoC, a new algorithm that extends GRPO (Group Relative Policy Optimization) with a Resample-on-Correct rollout strategy. Its reward design and algorithmic optimizations make the model's reasoning more accurate and efficient.

- Training Pipeline: rStar2-Agent is trained with an efficient “non-reasoning supervised fine-tuning + multi-stage reinforcement learning” pipeline, designed so that the model’s capabilities improve steadily at every stage.
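
To make the algorithm item more concrete, here is a minimal sketch of the group-relative advantage that the GRPO family of methods is generally built on. It is not Microsoft's implementation: the article does not describe the details of GRPO-RoC, so the resampling step is not shown, and the function name, the `eps` value, and the 0/1 rewards are all assumptions for illustration.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize each rollout's reward against its own group.

    `rewards` holds one scalar reward per rollout sampled for the same
    prompt. The helper name and `eps` are hypothetical, chosen for this
    illustration only.
    """
    if not rewards:
        return []
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation of the group
    # eps keeps the division defined when every rollout gets the same reward
    return [(r - mu) / (sigma + eps) for r in rewards]


# Assumed example: four rollouts for one math problem, reward 1.0 if the
# final answer is correct and 0.0 otherwise.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
```

Rollouts that beat their group's average get a positive advantage and the rest get a negative one, so no separate value model is needed to provide a baseline.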

Together, these advances have put rStar2-Agent in the spotlight in the field of AI agents and point to a new direction for future research and applications in intelligent agents.
